Comment Moderation: Why It Matters for Founders

Over 80 percent of American online platforms identify comment moderation as vital for community safety. The rapid growth of digital conversations means even a single unchecked comment can tarnish brand reputation or stir conflict. Understanding what drives comment moderation and how it works empowers anyone managing an American platform to build spaces where discussions stay productive and respectful.

Table of Contents

- Defining Comment Moderation and Its Purpose

- Manual vs. Automated Moderation Methods Compared

- Key Processes and Tools in Modern Moderation

- Protecting Brand Reputation and Community Safety

- Legal Responsibilities and Moderation Risks Explained

Key Takeaways

| Point | Details |
|---|---|
| Importance of Comment Moderation | Comment moderation is essential for maintaining quality discourse and ensuring a safe online environment for users. It balances free expression with community safety. |
| Hybrid Moderation Approach | Effective moderation combines automated techniques with human oversight to balance speed and accuracy in content filtering. |
| Legal Implications | Digital platforms must navigate complex legal responsibilities in content moderation, ensuring they protect user rights while preventing harmful content. |
| Proactive Strategies for Brands | Brands should implement robust moderation tools and clear guidelines to foster a positive communication culture and protect their reputation online. |

Defining Comment Moderation and Its Purpose

Comment moderation is the strategic process of monitoring, filtering, and managing user-generated content across digital platforms to maintain quality, safety, and constructive dialogue. At its core, comment moderation represents a critical intervention that protects online spaces from potentially harmful interactions while preserving meaningful community engagement.

Online platforms face significant challenges in maintaining healthy discourse. Research exploring challenges of reader comments on news platforms highlights the necessity of systematic comment moderation to prevent the spread of inappropriate or offensive content. By implementing robust moderation strategies, organizations can create safer digital environments that encourage respectful communication and minimize toxic interactions.

The purpose of comment moderation extends beyond simple content filtering. It involves complex decision-making about what constitutes acceptable communication, understanding contextual nuances, and balancing free expression with community safety. Interventions during content creation can reduce offensive interactions, which suggests that proactive moderation can be more effective than purely reactive measures.

Moderation techniques vary widely, ranging from automated AI filtering to human review processes. Effective strategies often combine technological tools with human oversight to capture subtle communication dynamics that algorithms might miss. This hybrid approach allows platforms to maintain authenticity while protecting users from harmful content.

Pro Tip: Comment Moderation Strategy: Develop clear, transparent community guidelines that outline acceptable communication standards, and consistently enforce these rules to build trust and maintain a positive online environment.

Manual vs. Automated Moderation Methods Compared

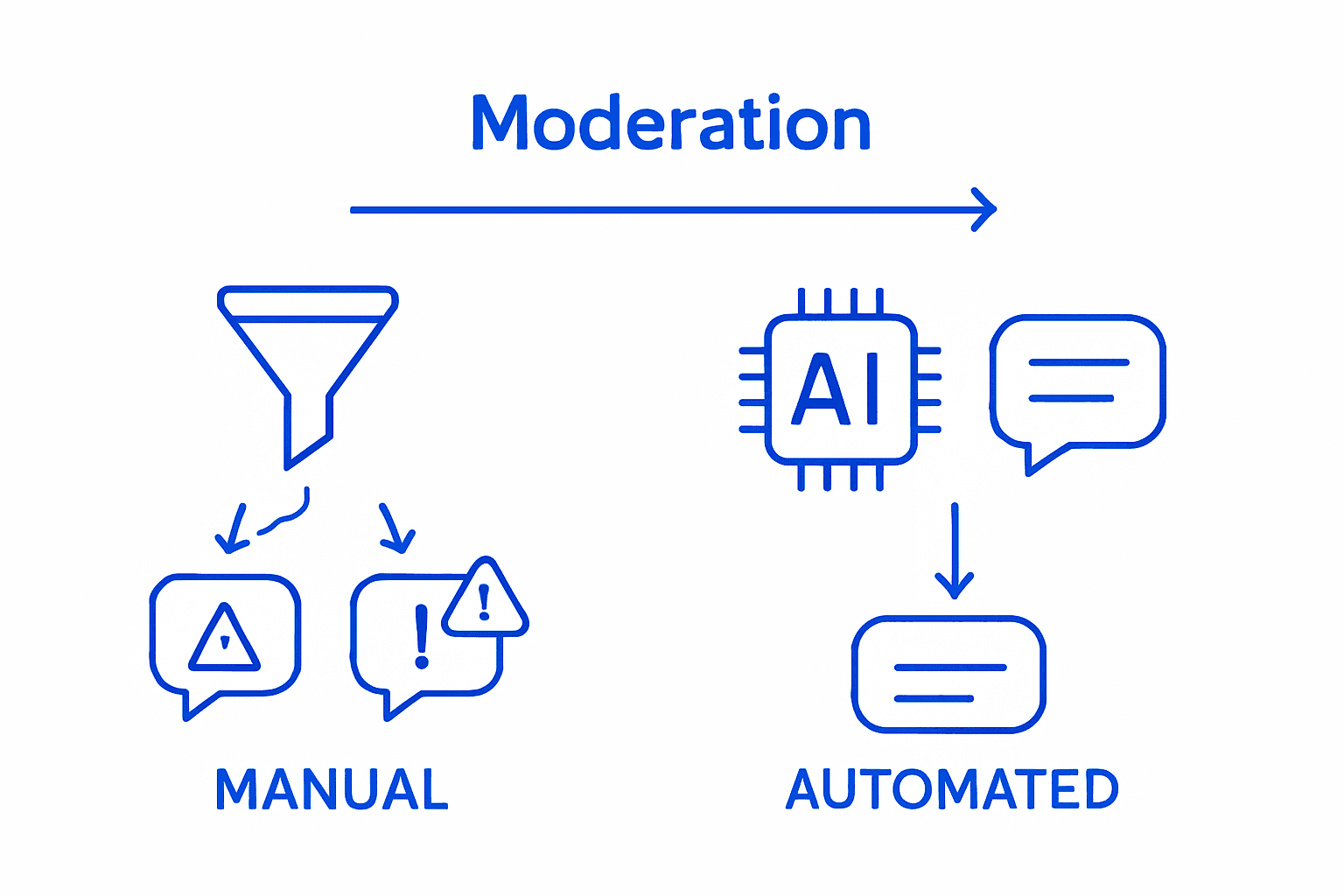

Comment moderation strategies fundamentally diverge between manual and automated approaches, each presenting unique advantages and challenges for digital platforms. The choice between these methods significantly impacts a platform’s ability to maintain quality discourse and user engagement.

Comparative research on linguistic annotation methods reveals nuanced differences between manual and automated content moderation techniques. Manual moderation relies on human judgment to evaluate context, tone, and subtle communication nuances that automated systems might overlook. Human moderators can understand complex cultural references, detect sarcasm, and make contextually informed decisions that pure algorithmic approaches struggle to interpret.

Automated moderation systems, powered by artificial intelligence and machine learning, offer scalability and rapid processing capabilities that human teams cannot match. Sentiment analysis techniques demonstrate significant potential in automated content evaluation, enabling platforms to quickly filter potentially harmful content. These systems can process thousands of comments per minute, applying predefined rules and identifying problematic language patterns with remarkable efficiency.

However, automated moderation is not without limitations. AI systems can sometimes misinterpret context, leading to false positives or missed nuanced offensive content. The most effective moderation strategies often incorporate a hybrid approach, combining automated filtering with periodic human review to balance speed, accuracy, and contextual understanding.

Here’s a side-by-side comparison of manual, automated, and hybrid comment moderation methods:

| Method | Core Strength | Key Limitation | Best Use Case |
|---|---|---|---|
| Manual | Context understanding | Not easily scalable | Small platforms, nuanced topics |
| Automated | High speed and volume | Misses contextual subtleties | Large sites, real-time needs |
| Hybrid | Balanced accuracy/speed | Requires coordination | Diverse, growing communities |

Pro Tip: Hybrid Moderation Strategy: Implement a layered moderation approach that uses AI for initial screening and human reviewers for complex or ambiguous cases, ensuring both efficiency and contextual accuracy.

Key Processes and Tools in Modern Moderation

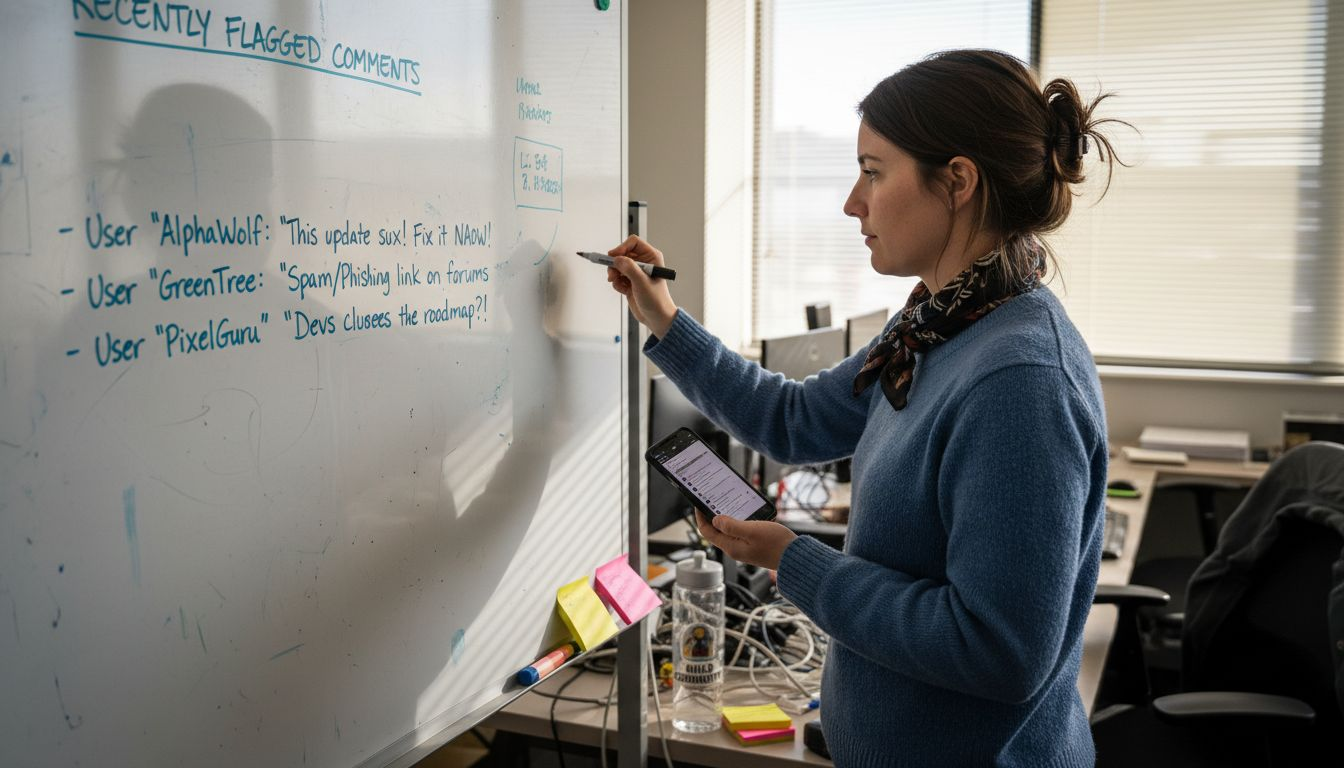

Modern content moderation encompasses a sophisticated ecosystem of technological and human-driven processes designed to maintain digital platform integrity. These systems have evolved dramatically, integrating advanced technological solutions with nuanced human insights to create comprehensive moderation strategies.

Natural language processing techniques provide sophisticated frameworks for automated content moderation, enabling platforms to develop complex filtering mechanisms. Key technological tools include machine learning algorithms, sentiment analysis systems, and contextual understanding models that can rapidly assess user-generated content across multiple dimensions such as toxicity, intent, and potential harm.

The contemporary moderation toolset typically includes several components. Keyword filters are the most basic level of automated moderation, blocking specific words or phrases. More advanced systems add contextual analysis tools that evaluate an entire comment rather than individual words, so they can flag problematic content that simple word matching misses. These systems aim to recognize sarcasm, implicit threats, and complex linguistic manipulations, although they remain less reliable than human reviewers on heavily context-dependent cases.
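To make the keyword-filter idea concrete, here is a minimal Python sketch. The blocklist and the function names (`BLOCKED_TERMS`, `find_blocked_terms`, `is_blocked`) are hypothetical and chosen only for illustration; a real deployment would maintain a curated list and handle far more edge cases.

```python
import re

# Hypothetical blocklist for illustration only; not taken from any real platform.
BLOCKED_TERMS = ["spamlink", "buy followers", "idiot"]


def build_pattern(terms):
    """Compile one case-insensitive regex that matches any term as a whole word or phrase."""
    escaped = (re.escape(term) for term in terms)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


BLOCK_PATTERN = build_pattern(BLOCKED_TERMS)


def find_blocked_terms(comment):
    """Return the distinct blocked terms found in the comment, lowercased and sorted."""
    return sorted({match.group(0).lower() for match in BLOCK_PATTERN.finditer(comment)})


def is_blocked(comment):
    """True if the comment contains at least one blocked term."""
    return bool(find_blocked_terms(comment))
```

Whole-word matching avoids flagging innocent words that merely contain a blocked term, but it also means that a variant such as "idiotic" passes this filter untouched. That gap is one reason keyword filters are usually treated as a first layer rather than a complete solution.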

Effective moderation strategies also integrate multiple layers of technological and human intervention. Automated systems provide initial screening, flagging potentially problematic content for human review. Human moderators then make final determinations, applying contextual understanding and cultural sensitivity that AI systems cannot fully replicate. This hybrid approach ensures both efficiency and depth of content assessment, balancing technological speed with human judgment.
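The layered flow described above can be sketched as a small triage function. This is an illustrative sketch under stated assumptions, not a production design: the thresholds, the `Decision` type, and `toy_scorer` are all hypothetical, and the scorer is a stand-in for whatever toxicity model a platform actually uses. The sketch also assumes that very high scores are removed automatically, whereas some teams prefer to send every flagged comment to a human.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    comment: str
    score: float
    action: str  # "approve", "remove", or "human_review"


def triage(comment: str,
           scorer: Callable[[str], float],
           approve_below: float = 0.2,
           remove_above: float = 0.9) -> Decision:
    """Route a comment based on an automated score between 0.0 and 1.0.

    Clear cases are handled automatically; everything in between
    is queued for a human moderator.
    """
    score = scorer(comment)
    if score < approve_below:
        action = "approve"
    elif score > remove_above:
        action = "remove"
    else:
        action = "human_review"
    return Decision(comment, score, action)


def toy_scorer(comment: str) -> float:
    """Placeholder scorer: the share of words found in a tiny hypothetical word list."""
    hostile = {"hate", "stupid", "trash"}
    words = [word.strip(".,!?").lower() for word in comment.split()]
    if not words:
        return 0.0
    return sum(word in hostile for word in words) / len(words)
```

For example, `triage("This is stupid", toy_scorer)` lands in the middle band and is routed to `human_review`. Widening or narrowing that band is how a team trades moderator workload against the risk of automated mistakes.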

Below is a summary of essential tools in modern content moderation and their main contributions:

| Tool Type | Primary Function | Added Value |
|---|---|---|
| Keyword Filters | Block flagged words/phrases | Simple, quick screening |
| Contextual Analysis AI | Analyze intent and tone | Detects nuanced harm |
| Human Review | Final content assessment | Applies cultural insight |
| Sentiment Analysis | Identify emotion/hostility | Flags subtle negativity |

Pro Tip: Moderation Tool Integration: Develop a comprehensive moderation strategy that combines multiple tools and approaches, creating layered defenses that can adapt to evolving communication patterns and potential abuse mechanisms.

Protecting Brand Reputation and Community Safety

Brand reputation represents a delicate ecosystem of public perception that can be dramatically influenced by the quality of online interactions and content associated with a company. Digital platforms have become critical battlegrounds where brands must actively manage their image and protect their community from potentially damaging communications.

Research examining content creators’ comment moderation strategies reveals how seemingly minor interactions can significantly impact brand perception. The strategic pinning or highlighting of specific comments can subtly shape community norms and audience expectations, making moderation a powerful tool for narrative control and reputation management.

Advanced natural language processing methods for toxicity detection demonstrate the technological sophistication now available to brands seeking to maintain safe digital environments. Modern moderation tools go beyond simple keyword blocking, utilizing complex algorithmic approaches that can detect nuanced forms of harmful communication. These systems analyze context, intent, and potential emotional impact, providing brands with granular control over their online discourse.

Effective brand protection requires a proactive and multilayered approach to community management. This involves not just filtering out obviously offensive content, but creating a positive communication culture that encourages constructive dialogue. Brands must develop clear community guidelines, implement robust moderation tools, and consistently enforce standards that reflect their core values and professional identity.

Pro Tip: Reputation Defense Strategy: Develop a comprehensive communication policy that outlines acceptable interaction standards, and train both automated systems and human moderators to consistently enforce these guidelines across all digital platforms.

Legal Responsibilities and Moderation Risks Explained

Legal liability in digital content moderation represents a complex landscape where platforms must carefully navigate potential risks and regulatory requirements. Digital platforms bear significant responsibilities for the content shared within their ecosystems, creating a challenging environment for founders and community managers.

Comprehensive research addressing the complexities of automated content moderation reveals the intricate legal challenges platforms face when implementing moderation strategies. Platform owners must balance protecting user rights, maintaining free expression, and preventing potentially harmful or illegal content from proliferating across their digital spaces.

The legal risks associated with content moderation extend beyond simple content filtering. Platforms can face substantial legal consequences for both over-moderation and under-moderation. Removing too much content might infringe on user speech rights, while insufficient moderation could expose platforms to liability for harmful or potentially illegal user-generated content. Striking this balance requires approaches that integrate legal expertise with technological capabilities.

Moderation strategies must consider multiple legal dimensions, including privacy regulations, intellectual property rights, and potential liability for user interactions. Founders must develop comprehensive policies that clearly outline acceptable content standards, provide transparent moderation processes, and maintain robust documentation of moderation decisions. This approach helps mitigate potential legal risks and demonstrates a proactive commitment to responsible platform management.
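One way to keep documentation of moderation decisions is an append-only log with one structured record per decision. The sketch below is a hypothetical illustration: the field names, the `ModerationRecord` type, and the JSON-lines format are assumptions, and which fields a platform is actually required to keep is a question for legal counsel.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class ModerationRecord:
    comment_id: str
    action: str       # e.g. "approve", "remove", "human_review"
    rule: str         # which guideline the decision relied on
    decided_by: str   # e.g. "auto" or a moderator identifier
    decided_at: str   # ISO 8601 timestamp in UTC


def make_record(comment_id, action, rule, decided_by, now=None):
    """Build a record, stamping it with the current UTC time unless one is supplied."""
    now = now or datetime.now(timezone.utc)
    return ModerationRecord(comment_id, action, rule, decided_by, now.isoformat())


def to_log_line(record):
    """Serialize a record as one JSON line, suitable for appending to a log file."""
    return json.dumps(asdict(record), sort_keys=True)
```

Recording the guideline behind each decision, and whether a person or an automated system made it, makes it easier to explain a removal later and to audit whether rules are being applied consistently.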

Pro Tip: Legal Protection Strategy: Consult with legal professionals specializing in digital platform regulations to develop a comprehensive moderation policy that balances user rights, platform protection, and regulatory compliance.

Enhance Your Brand’s Voice with Smart Comment Moderation and Engagement

Navigating the complexities of comment moderation while maintaining authentic community engagement presents a real challenge for founders and creators. The article highlights key pain points such as balancing content quality, brand reputation protection, and legal responsibilities with scalable moderation solutions. At Commentions, we understand that effective moderation requires a blend of contextual understanding and timely action to foster meaningful conversations and positive brand perception.

Take control of your brand narrative by leveraging AI-driven, human-like comments that amplify your presence without compromising authenticity. With Commentions, you can automatically engage on high-impact posts, ensuring your brand voice is always aligned with your goals while easing the moderation burden. Don’t wait to build a safer, more visible, and credible digital community. Learn more about how we help founders grow by visiting our landing page and discover how smart comment engagement complements robust moderation strategies at Commentions.com. Start transforming your comment moderation challenges into opportunities today.

Frequently Asked Questions

What is comment moderation and why is it important?

Comment moderation is the process of monitoring and managing user-generated content on digital platforms. It is crucial for maintaining community safety, quality discourse, and protecting against harmful interactions.

What are the differences between manual and automated comment moderation?

Manual moderation relies on human judgment to understand context and nuances, while automated moderation uses AI to filter content at scale. Each has strengths; manual moderation excels in context understanding, whereas automated systems provide speed and efficiency.

How can comment moderation impact brand reputation?

Effective comment moderation helps shape the narrative around a brand by promoting positive interactions and filtering out harmful content. It plays a significant role in managing public perception and ensuring a safe space for community engagement.

What legal responsibilities do platforms have regarding comment moderation?

Platforms have legal obligations to balance user rights and content regulation. This includes preventing the spread of illegal or harmful content while respecting free speech. A comprehensive moderation policy must consider privacy laws and potential liabilities.
